Snap Performance Skunk Works – Ensuring speed and consistency for snaps

Igor Ljubuncic

on 16 September 2021

Tags: benchmark, checkbox, improvement, Performance, snapcraft.io, Snaps, speed, testing

Snaps are used on desktop machines, servers and IoT devices. However, it’s the first group that draws the most attention and scrutiny. Due to the graphic nature of desktop applications, users are often more attuned to potential problems and issues that may arise in the desktop space than with command-line tools or software running in the background.

Application startup time is one of the common topics of discussion in the Snapcraft forums, as well as on the wider Web. The standalone, confined nature of snaps means that their startup procedure differs from that of classic Linux programs (like those installed from Deb or RPM packages). Often, this can translate into longer startup times, which are perceived negatively. Over the years, we have talked about the various mechanisms and methods introduced into the snap ecosystem to provide performance benefits: font cache improvements, a compression algorithm change, and others. Now, we want to give you a glimpse of a Skunk Works* operation inside Canonical, with a focus on snaps and startup performance.

Checkbox

While speed improvements are always useful and warmly received by users, consistency of results is equally (if not more) important. Users tend to value a gain of one second less than they resent the loss of that same second later in the software’s lifecycle. An application whose startup time has improved is expected to stay that way, and users will typically respond more negatively to any new delay than they did to the original issue.

Performance-related regressions present a difficult challenge, and they tie into two main aspects of software development: actual, tangible changes in the code, and the overall understanding and control of the system.

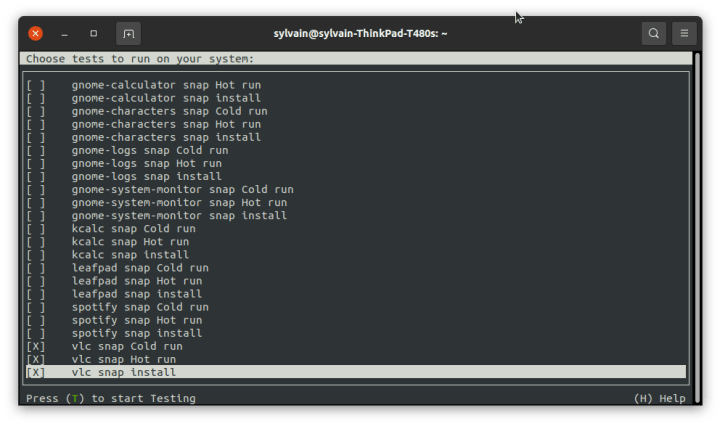

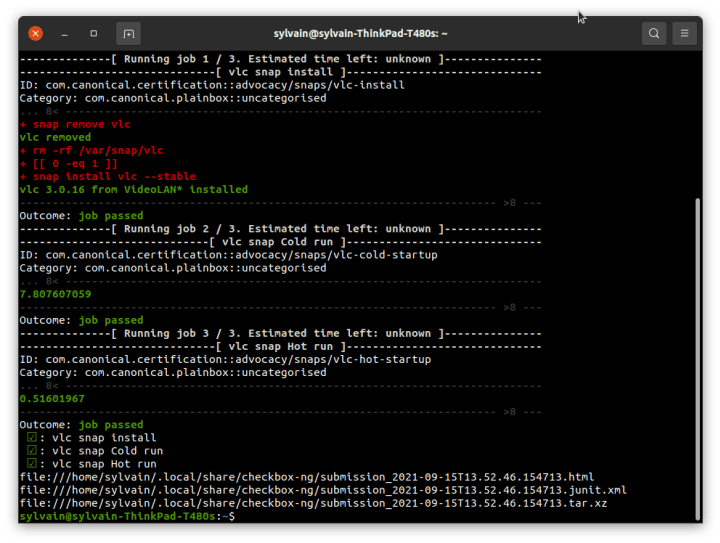

To address these, Canonical’s Certification team uses the Checkbox test automation software suite to perform a range of hands-off regression and performance tests for different Canonical products. The tool offers a great deal of flexibility, including custom tasks and reporting. Snap testing is also available through the checkbox-desktop-snaps utility (also distributed as a snap).

By default, Checkbox will measure the cold (no cached data) and hot (cached data) startup times of 10 prominent desktop snaps on multiple hardware platforms, and report the results. But things really get interesting when we look at the environment setup.
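To make the cold versus hot distinction concrete, here is a minimal Python sketch. It is not how Checkbox itself measures startup; it only illustrates the idea under some loud assumptions: the command under test runs to completion (a real desktop application would need a "window is ready" signal instead), the machine runs Linux, and the script has root privileges to write to `/proc/sys/vm/drop_caches`. The function names are hypothetical.

```python
import subprocess
import time


def time_command(cmd):
    """Return the wall-clock seconds a command takes to run to completion."""
    start = time.perf_counter()
    subprocess.run(
        cmd, check=True, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL
    )
    return time.perf_counter() - start


def drop_page_cache():
    """Flush dirty pages, then ask the kernel to drop its caches.

    Assumption: Linux with root privileges. Writing "3" drops the page
    cache plus dentries and inodes.
    """
    subprocess.run(["sync"], check=True)
    with open("/proc/sys/vm/drop_caches", "w") as handle:
        handle.write("3\n")


def cold_and_hot(cmd, drop_cache=drop_page_cache):
    """Measure one cold start (caches dropped) followed by one hot start."""
    drop_cache()
    cold = time_command(cmd)
    # The second run can reuse whatever the first run pulled into the cache.
    hot = time_command(cmd)
    return cold, hot
```

The `drop_cache` parameter exists only so the cache-dropping step can be swapped out, for example with a no-op when trying the sketch without root access.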

Interaction between system and snap

Regardless of the technology and tooling used, measuring execution times in software can be tricky, because it is difficult to separate (or sanitize) the application in question from the overall system. A program that has network connectivity may report inconsistent results depending on the traffic throughput and latency. Different disk types and I/O activity will also affect the timing. There may be significant background activity on the machine, which can also introduce noise, and skew the results. The list of possible obstacles goes on and on.

In situations like these, which are designed to simulate real-life usage conditions, the idea is not to ignore or remove the common phenomena, but to normalize them in a way that will offer reliable results. For example, repeated testing during different times of the day can remove some of the variation in results related to network or disk activity.

With Checkbox and snaps, we decided to go one step further, and directly examine the impact that both the operating system and the snaps themselves have on the startup measurement results!

One change at a time

Before we can claim full understanding of the system, we need to understand how different components interact. With snaps, there are many variables that come into play. For instance, if a snap refreshes and receives an update, can we treat the new startup results as part of the same set as earlier data, or a brand new set? If there is a kernel update, can we or should we expect snap startup times not to change?

Isolating the different permutations of a typical Linux machine is not trivial. To that end, we decided to create two distinct sets of tests:

- Immutable systems that receive no updates, where only the installed snaps change through periodic refreshes. Whenever there is a snap update, the Checkbox testing starts, and new data is collected. This way, it is possible to determine whether any change in the startup times, for better or worse, stems from the actual changes in the snap applications.

- Immutable snaps tested on systems that receive updates. Here, we keep snaps pegged to a specific version (e.g., Firefox 89, VLC 3.0.8), and then trigger testing whenever there is a system change in one of the five critical components: kernel, glibc, graphics drivers, AppArmor, and snapd. This way, we can correlate any changes in the startup behavior of one or more snaps to the system updates.
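The trigger logic in the second test set can be sketched as a comparison of two version snapshots. This is an illustration only, not Checkbox code: the component keys, the version strings, and the function name are all hypothetical, and how the versions are actually collected from the system is left out.

```python
# The five critical components named in the text. The key spellings are
# an assumption made for this sketch.
CRITICAL_COMPONENTS = ("kernel", "glibc", "graphics-driver", "apparmor", "snapd")


def changed_components(previous, current, watched=CRITICAL_COMPONENTS):
    """Return the watched components whose version differs between snapshots.

    Each snapshot is a dict mapping component name to version string.
    A component missing from one snapshot counts as changed.
    """
    return [name for name in watched if previous.get(name) != current.get(name)]


def should_run_tests(previous, current):
    """A test run is due as soon as any critical component has changed."""
    return bool(changed_components(previous, current))
```

Recording the list of changed components alongside each test run is what makes it possible to correlate a shift in startup times with a specific system update.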

We run the tests with multiple configurations in place:

- Hardware with different graphics cards.

- Hardware with mechanical disks and SSDs.

- Supported LTS releases and the latest development image.

The extensible nature of the Checkbox tool allows the inclusion of any snap, in any number, and custom tests can also be added if needed. For instance, on top of the startup times, the tool can collect screenshots, which allow for a visual comparison of the results, such as possible inconsistencies in theming among different snaps, desktop environments, and versions of desktop environments.

From data to control

When we first started collecting the numbers on startup times, we focused on the actual figures. However, in the larger scheme of things, these values are less important than the relative differences of the collected results under different conditions for the same snaps, on the same hardware configuration. For instance, how does a snap startup time change when moving from one LTS image to another? Do kernel updates affect the results?
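One simple way to express such a relative difference is a percentage change between the typical startup time under two conditions. The sketch below uses the median as the "typical" value, which is our assumption for illustration rather than a description of the Checkbox reports; the function name is hypothetical.

```python
from statistics import median


def relative_change(reference, candidate):
    """Percentage change of the median startup time, candidate vs. reference.

    Both arguments are lists of startup times in seconds for the same snap
    on the same hardware. A positive result means the candidate condition
    (for example, a newer LTS image) starts more slowly.
    """
    reference_median = median(reference)
    candidate_median = median(candidate)
    return (candidate_median - reference_median) / reference_median * 100.0
```

The median is less sensitive than the mean to a single noisy run, which matters when background activity occasionally skews one measurement.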

Once we can establish how snaps behave under various operational conditions, we can create a baseline: minimum and maximum values, average times, and other parameters for which we can define alerts. This will allow us to identify any potentially bad results in a snap’s behavior as part of our testing, and immediately flag system changes (or snap refreshes) that may lead to a degraded user experience.
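A baseline with an alert can be sketched in a few lines. Everything here is an assumption made for illustration: the class and function names are hypothetical, and the alert rule (a new sample exceeding the baseline maximum by more than 10%) is just one plausible choice, not the rule used in our testing.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Baseline:
    minimum: float
    maximum: float
    average: float


def build_baseline(samples):
    """Summarize known-good startup times (in seconds) into a baseline."""
    return Baseline(min(samples), max(samples), mean(samples))


def check_sample(baseline, value, tolerance=0.10):
    """Return an alert message if value is too slow, otherwise None.

    Assumed rule: alert when the new startup time exceeds the baseline
    maximum by more than the given tolerance (10% by default).
    """
    limit = baseline.maximum * (1 + tolerance)
    if value > limit:
        return f"startup time {value:.2f}s exceeds limit {limit:.2f}s"
    return None
```

In practice, a separate baseline would be kept per snap and per hardware configuration, since the absolute figures are only comparable under the same conditions.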

Summary

Collecting and analyzing snap startup time data goes beyond just making sure the snaps launch quickly, and that users have a good experience. The mechanism also allows us to better understand the complex interaction between hardware and software, and between different operating system components. As we expand our work with the Checkbox tool, we will be able to create complex formulas that tell us how kernel updates, system patches, or perhaps snap refreshes affect the startup performance. We already know that using LZO compression for snap packaging can improve startup times by 50-60%. Perhaps adding a new library into a snap can make a big difference? Or maybe certain distro releases are faster than others?

At the moment, Checkbox is designed to work under the GNOME desktop environment, but we also have test builds that can collect data on KDE and Xfce. We’re constantly improving the framework, and we’re looking for ways to improve its usability: easier sideloading of tests, test customization, configuration, data export, etc. If you have any comments or ideas, please join our forum, and let us know.

Article written by Igor Ljubuncic and Sylvain Pineau.

* Skunk Works is an official pseudonym for Lockheed Martin’s Advanced Development Programs (ADP), formerly called Lockheed Advanced Development Projects, coined in the 1940s, and since widely adopted by businesses and companies for their cool, out-of-band, secretive, or state-of-the-art projects.

Photo by lalo Hernandez on Unsplash.
